Learning services professionals spend a lot of time designing, administering, and analyzing the results of their training programs. They must constantly maintain compliance and monitor changing standards for their organizations.

While all these pieces are part of an effective training program, none of them involves communicating directly with the people most significantly impacted by learning programs: the learners themselves.

Surveys can fill this gap by collecting feedback from learners and feeding it directly into an LRS (Learning Record Store).

This approach lets you measure objective learning outcomes by asking questions about the topic you hoped to teach, and it also lets you collect subjective feedback from participants. That kind of open-ended communication is one of the best ways to create courses that are engaging and effective for your learners.

To help you implement pre- and post-training surveys quickly and easily, we’ve pulled together this handy guide so you can evaluate your programs with accurate data and make improvements based on input from real users.

Why Add Surveys to Training Courses

Particularly when there are standards to adhere to and compliance requirements to meet, it’s easy to lose sight of how important training programs are to the people who take them.

Engaged learners may seem like merely a bonus, but if you can design learning programs that are enjoyable while still meeting your organization’s goals, you’ll reap the benefits of higher levels of participation and better outcomes.

When courses and trainings are designed around participant feedback, you may find that you can reduce costs and overhead by running them less often. If learners enjoy a course, they’re more likely to retain the information; higher retention means fewer repeat classes.

Surveys that integrate seamlessly with an LRS don’t impose an additional burden on learning services teams, either.

They can be administered online, either during the class itself or a day or two after its conclusion. Using the Tin Can API (also known as the Experience API, or xAPI), the survey results can be incorporated into an individual learner’s record. They can also be viewed in aggregate to evaluate the general effectiveness of a course’s design or an instructor’s style.
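To make that concrete, here is a minimal sketch of what a single survey answer could look like as a Tin Can (xAPI) statement. It only builds the statement; it does not send it anywhere. The function name, the learner details, and the `example.com` question identifier are assumptions made up for illustration, and the 1 to 5 rating scale is an assumed default rather than anything a particular survey tool requires.

```python
import json
from datetime import datetime, timezone


def build_survey_statement(learner_name, learner_email, question_id,
                           question_text, rating, scale_min=1, scale_max=5):
    """Build an xAPI statement (as a dict) for one rating-scale survey answer."""
    if not scale_min <= rating <= scale_max:
        raise ValueError("rating must fall within the scale")
    return {
        # Who answered: an Agent identified by email address.
        "actor": {
            "objectType": "Agent",
            "name": learner_name,
            "mbox": f"mailto:{learner_email}",
        },
        # What they did: the ADL "answered" verb.
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/answered",
            "display": {"en-US": "answered"},
        },
        # What they answered: the survey question, identified by an IRI.
        "object": {
            "objectType": "Activity",
            "id": question_id,  # hypothetical identifier
            "definition": {
                "type": "http://adlnet.gov/expapi/activities/cmi.interaction",
                "description": {"en-US": question_text},
            },
        },
        # The answer itself, plus the scale it was given on.
        "result": {
            "response": str(rating),
            "score": {"raw": rating, "min": scale_min, "max": scale_max},
        },
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


# Made-up sample data for illustration only.
statement = build_survey_statement(
    learner_name="Example Learner",
    learner_email="learner@example.com",
    question_id="https://example.com/surveys/post-training/q1",
    question_text="How would you rate the instructor?",
    rating=4,
)
print(json.dumps(statement, indent=2))
```

Because every answer carries the learner, the question, and the score, the same statements can be read back per learner or grouped by question to look at a course as a whole.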

Long-Term Benefits of Surveys for Learners

By collecting feedback from each course attendee and adding it to their LRS record, you’ll be able to start identifying patterns in which types of classes are most effective for each learner.

Some people will find live, in-person trainings the most engaging, while others will thrive in the more flexible world of virtual, self-paced learning. When you offer pre- and post-training surveys, you’ll know how each person prefers to learn.

This information is invaluable in selecting instructors, course types, class length, and more. It can help improve learning outcomes, and allow learning services professionals to create the best possible curricula for their audiences.

Key Components of a Pre-Training Survey

When designing a survey to administer to your course attendees prior to the class, keep in mind that your goal is to assess their current level of knowledge of the topic they’re about to study.

The exact length of your survey will vary based on the complexity of the course and topic, but do your best to keep this survey short and to the point. You don’t want people tired of answering questions before their first lesson.

A good rule of thumb is to consider what you plan to do with the results of each question. If you don’t have a clear objective in mind for the question, it’s probably best to omit it.

Regardless of topic or format, be sure to include questions about:

- The most important concepts the course is designed to teach. You need to establish a baseline of understanding for each respondent, and then measure how much improvement there is after the class is over.

- Their personal goals for the class. This type of question is particularly important for professional advancement or elective trainings.

- Personal learning preferences. Some people know which teaching styles appeal to them most; others won’t have a good understanding of their learning style. For those who need help identifying how they learn best, this can be a great opportunity for proactive attention from a learning services team.

Designing Your Post-Training Survey

After the class has concluded, it’s time to see how it stacked up. This means you need to measure how well it taught the required information, but it’s also an opportunity to get more subjective feedback from participants.

Because of this dual purpose, you may find you get the best data when you divide the post-training survey into two different sections:

- Questions about the key concepts from the course

- Individual opinions about the course content, quality, and instructor

Measuring Course Effectiveness

If a course is required for compliance or training purposes, you probably have a set of key objectives that your learners are expected to meet. You should have covered these in your pre-course survey too, and now you need to repeat those questions to measure participants’ changes in understanding.

While it’s not necessary to repeat the pre-course survey questions verbatim, you should make only minor changes to your language to avoid introducing any bias into the results. Your goal with these questions is simply to measure how much the attendees’ knowledge improved since they first answered the questions.
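Once both surveys are in, the comparison itself is simple arithmetic. The sketch below assumes each learner has a pre-course and post-course score on the same set of knowledge questions. The learner names and scores are made-up sample data, and the function name is hypothetical. Alongside the raw difference it computes a normalized gain (the share of the available improvement a learner actually achieved), which is one common way to compare learners who started at different levels, not the only valid measure.

```python
from statistics import mean


def knowledge_gain(pre, post, max_score):
    """Return (raw gain, normalized gain) for one learner."""
    raw = post - pre
    # Headroom is how much the learner could still improve before the course.
    headroom = max_score - pre
    # A learner who already scored the maximum has no headroom to gain.
    normalized = raw / headroom if headroom > 0 else 0.0
    return raw, normalized


MAX_SCORE = 10

# Made-up sample data: learner -> (pre-course score, post-course score).
scores = {
    "learner_a": (4, 8),
    "learner_b": (6, 9),
    "learner_c": (10, 10),
}

gains = {
    name: knowledge_gain(pre, post, MAX_SCORE)
    for name, (pre, post) in scores.items()
}
average_raw_gain = mean(raw for raw, _ in gains.values())

for name, (raw, normalized) in gains.items():
    print(f"{name}: raw gain {raw}, normalized gain {normalized:.2f}")
print(f"Average raw gain: {average_raw_gain:.2f}")
```

This only works if the pre- and post-course questions measure the same thing, which is why the wording should change as little as possible between the two surveys.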

Evaluating Content and Instructor

Although you are looking for open-ended, personal feedback in this section, don’t just give your students big empty boxes and ask them to put in their opinions. Most people feel overwhelmed by this type of unguided “question” and will simply leave the space blank.

Instead, offer specific questions that will help to focus people’s memories on particular aspects of the class.

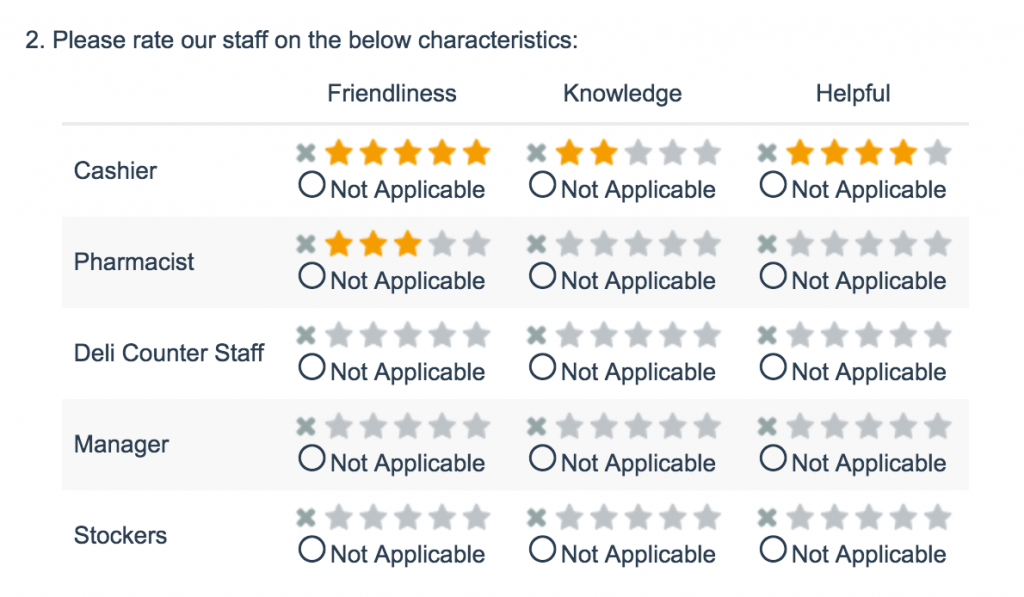

Ranking scale questions can be a great way to collect aggregate feedback about course content and instructor quality:

Survey logic can be a great addition to these questions; for example, you can create conditional questions that appear only for respondents who give very low scores. You might ask these people what they would suggest changing that would lead to a better experience in the next course.

These open text questions can be time-consuming to review, but they often provide the most useful input for improvement.
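Most survey tools let you configure this kind of conditional logic without code, but the rule itself is easy to sketch. The example below assumes ratings on a 1 to 5 scale and treats a score of 2 or lower as "very low"; the threshold, the function name, and the sample ratings are all assumptions for illustration.

```python
LOW_SCORE_THRESHOLD = 2  # assumption: ratings run from 1 (worst) to 5 (best)


def follow_up_questions(ratings, threshold=LOW_SCORE_THRESHOLD):
    """Return an open-text follow-up question for each low-rated topic."""
    follow_ups = []
    for topic, rating in ratings.items():
        # Only respondents who gave a low score see the extra question.
        if rating <= threshold:
            follow_ups.append(
                f"What would you change about the {topic} "
                "to improve the next course?"
            )
    return follow_ups


# Made-up sample response from one participant.
ratings = {"course content": 4, "instructor": 2, "pacing": 1}
questions = follow_up_questions(ratings)

for question in questions:
    print(question)
```

Showing the open-text box only to people who gave a low score keeps the survey short for everyone else and limits how many free-text answers you need to review.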

Integrating Surveys With Your LRS

All of this great survey data won’t do you much good, however, if it’s separated from the rest of your Learning Record Store (LRS) data. Fortunately, the Tin Can API makes it possible for survey data to integrate seamlessly with existing LRS and LMS setups.
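For readers curious about what happens under the hood, the sketch below prepares the HTTP request that would deliver one statement to an LRS. It is a generic illustration of the Tin Can (xAPI) statements resource, not a description of how any particular survey product implements its integration. The endpoint URL and credentials are placeholders, the function name is hypothetical, and the sketch assumes the LRS accepts HTTP Basic authentication. The request is only built, not sent.

```python
import base64
import json
import urllib.request

# Placeholder values: substitute the details your LRS provides.
LRS_ENDPOINT = "https://lrs.example.com/xapi"
LRS_KEY = "my-key"
LRS_SECRET = "my-secret"


def build_statement_request(statement, endpoint=LRS_ENDPOINT,
                            key=LRS_KEY, secret=LRS_SECRET):
    """Prepare (but do not send) a POST of one statement to an LRS."""
    credentials = base64.b64encode(f"{key}:{secret}".encode("utf-8"))
    return urllib.request.Request(
        url=f"{endpoint.rstrip('/')}/statements",
        data=json.dumps(statement).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {credentials.decode('ascii')}",
            # The LRS uses this header to know which spec version to apply.
            "X-Experience-API-Version": "1.0.3",
        },
        method="POST",
    )


# A minimal statement: actor, verb, and object are the required parts.
statement = {
    "actor": {"mbox": "mailto:learner@example.com"},
    "verb": {"id": "http://adlnet.gov/expapi/verbs/answered"},
    "object": {"id": "https://example.com/surveys/post-training/q1"},
}

request = build_statement_request(statement)
print(request.get_method(), request.full_url)

# To actually send the statement, you would open the request:
# with urllib.request.urlopen(request) as response:
#     print(response.status)
```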

Alchemer offers a simple yet robust integration via the Tin Can API, so you can harness the power of pre- and post-training surveys in your learning services initiatives.

Have you improved your course offerings based on survey data? We’d love to hear from you! Tell us all about it in the comments.